After the biggest news from Google I/O 2025? We’ve got you covered. Having tuned in to Google’s big event and watched the flood of AI announcements cascade in, I’m sure of one thing: Google is positioning itself as an AI brand now. From smarter search to virtual try-ons and glasses that whisper directions in your ear, here are the 10 best announcements in bite-sized format.

1. Gemini in Android XR glasses is finally here… sort of

Smart glasses have flirted with AI before (I’m looking at you, Meta Ray-Bans), but Google’s latest Android XR push – now paired with Gemini – might actually make them useful enough to wear all the time.

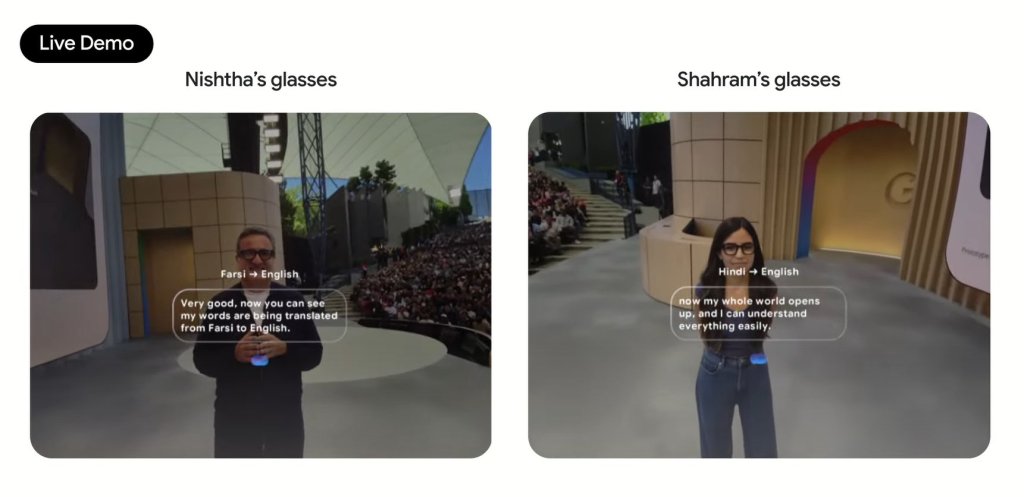

Running on a new Android XR platform, these glasses (developed with partners like Gentle Monster and Warby Parker) blend AI assistance with surprisingly wearable designs. They see and hear what you do, serve up helpful suggestions to an optional in-lens display, and keep your hands free while navigating your day. Whether you’re translating conversations in real time, firing off a text, or snapping photos with a blink, it’s like having a helpful assistant on your face.

Even better, Xreal is jumping into the mix too, with plans to bring its own glasses into the Android XR ecosystem. Expect a wave of Gemini-powered headsets and wearables later this year, starting with Samsung’s Project Moohan.

2. Veo 3 and Flow: film school in your pocket

Video making just got democratised in a big way. And I’m also slightly terrified. Veo 3 is Google’s newest generative video model and it doesn’t just render stunning 1080p scenes – it adds sound, too. We’re talking street ambience, background music, and even believable character dialogue, all from a prompt.

To go with it, Google launched Flow, a new AI filmmaking tool purpose-built for creatives. You can storyboard, manage assets like characters and props, and sequence scenes with cinematic polish, all by describing your ideas. There are even camera controls, continuity features, and reference styles to keep everything visually coherent. It’s available now for Google AI Pro and Ultra users in the US.

3. Imagen 4: finally, AI can spell

It’s a sad state of affairs when we get excited that an image generator can finally spell properly – but here we are. Imagen 4 isn’t just about better textures and photorealism (although it’s very good at both) – it also gets typography right.

Posters, comics, and slides should all be useable now. No more garbled nonsense text that makes your creations look like a ransom note. It’s fast, flexible with aspect ratios, and supports resolutions up to 2K, making it ideal for everything from Instagram flexing to full-blown print layouts. Imagen 4 is now live in the Gemini app and Workspace apps like Docs and Slides.

4. Jules can code so you don’t have to

Google’s take on the future of software development isn’t a sidekick. Jules is a full-blown autonomous coding agent that plugs into your existing repos, clones your project into a secure VM, and just… gets to work.

It writes new features, fixes bugs, updates dependencies, and even narrates the changes with an audio changelog. I absolutely love that last part. You can watch its reasoning, edit its plan on the fly, and stay in control without doing the actual slog. It’s powered by Gemini 2.5 Pro and available now in public beta globally, wherever Gemini is available.

5. Show, don’t tell with Gemini Live

We’ve all had those moments where describing the problem feels harder than fixing it. Gemini Live now lets you point your camera at whatever’s giving you grief – be it a form you don’t understand or a baffling piece of IKEA furniture – and talk it through.

With camera and screen sharing now available for free on Android and iOS, it’s already becoming an easy way to get answers to your questions. Gemini Live will soon integrate with Google Maps, Calendar, Tasks and Keep too, meaning you can show it your dinner plan chaos and have it suggest a time, a place, and actually create the event.

6. AI Mode comes to Google Search

Google Search is now less “here are some results” and more “here’s your answer and I bought the tickets.” AI Mode is rolling out in the US with advanced reasoning, a multimodal interface, and the ability to follow up like an attentive conversation partner.

It can interpret long, detailed questions, and even handle real-world interactions – like analysing ticket listings or booking appointments. You can also shop smarter, with a visual browsing experience, virtual try-ons using your own photo, and an agentic checkout that’ll buy your item when the price dips.

Obviously, this is what Google sees as the future of search. While some of these features definitely seem useful, I’m not sure I’m sold on using them all the time. Fortunately, AI Mode exists alongside regular Search. For now, at least. But if the God-awful AI Overviews are anything to go by, Google will transition this to the default in the near future. Though, Google says people are actually using AI Overviews. So maybe it does know best.

7. Deep Think is Gemini’s new brainy mode

Gemini 2.5 Pro is already a monster of an AI model, but now it’s getting an experimental mode called Deep Think. Designed for tasks that require actual reasoning – like solving complex maths or competitive coding problems – it uses new techniques to consider multiple solutions before deciding what to say. It’s been tested on brutal academic benchmarks and is currently reserved for trusted testers, but the results so far are ridiculously impressive.

8. Personalised AI hits a new level (impressive or creepy, you decide)

Google is finally putting all that data it’s quietly been collecting – sorry, respectfully managing – to actual good use. With your permission, Gemini can pull in personal context from Gmail, Drive, Calendar, and more to provide answers that actually reflect your life.

New Smart Replies in Gmail promise to match your tone and include info from old itineraries or past messages. Deep Research now lets you add your own documents for richer insights, and Canvas lets you turn those insights into apps, visuals, even podcasts. It’s personalisation that actually feels useful, not just creepy. Although the personalised replies I’ve used in Superhuman haven’t been all that helpful, so hopefully Google does a better job.

9. Google Beam is actually real… which means holograms are a step closer

By this point, we’ve all been on too many soul-sucking video calls that leave us staring at a pixelated freeze-frame of our own disappointment. Beam wants to change that. Born from the now-retired Project Starline, it’s a new 3D video platform that uses six cameras and real-time rendering to make it feel like you’re actually in the room with someone.

Facial expressions, eye contact, and body language all get captured and displayed with millimetre-precise head tracking on a 3D lightfield screen. Think Apple’s Personas from the Vision Pro headset. The first Beam devices are coming later this year in partnership with HP. And while it’s not quite a hologram yet, it does put us one step closer. Which is undeniably cool.

10. Already shop online? Now you’ll never leave the house

Online shopping is equal parts convenience and chaos. But Google’s new AI Mode makes it feel more like chatting with a knowledgeable shop assistant. Say you’re looking for a bag that’ll hold up in rainy weather. AI Mode fans out multiple searches, checks waterproofing, capacity, and brand ratings, then shows you a visual panel of curated suggestions. It can bring in Personal Context, so if you’re shopping for toys for your dog or kids, it’ll know their names.

But the best part has to be the fact that you can now try clothes on virtually using a photo of yourself. A number of small startups have been working on this problem, but now it’s baked right into Google. The fitting rooms are the worst part of going shopping (and there are many), so this makes things more convenient than ever. And when you’re ready to buy, an agentic checkout will handle it via Google Pay. It’s live in Search Labs in the US today and will roll out to more users soon.

If there’s one theme from Google I/O 2025, it’s that the search giant is doubling down on making AI useful, not just smart. With so many of these tools already live or landing soon, it’s clear Google is done teasing and ready to deliver. In fairness, some of Google’s newest announcements are undeniably impressive.

But AI fatigue is definitely setting in. And I can see a real possibility where Google Search gets ruined (even more) in the near future. So watch this space for whatever comes next.
